Update on Ethics in AI for Advanced Medical Devices

Artificial Intelligence (AI) continues to revolutionize healthcare, including through its integration into advanced medical devices. These innovations promise enhanced diagnostics, personalized treatment plans, and real-time patient monitoring, with the potential to significantly improve patient outcomes.

However, AI also raises many ethical questions, from the privacy of digital health data to algorithmic bias to the transparency of AI decision-making. Stakeholders must set aside competing interests to ensure that artificial intelligence is used ethically.

Progress on AI Ethics in Healthcare

The broader healthcare sector has already begun formulating ethics declarations related to machine learning. Fundamental principles such as fairness, accountability, transparency, and privacy guide the development and implementation of AI systems.

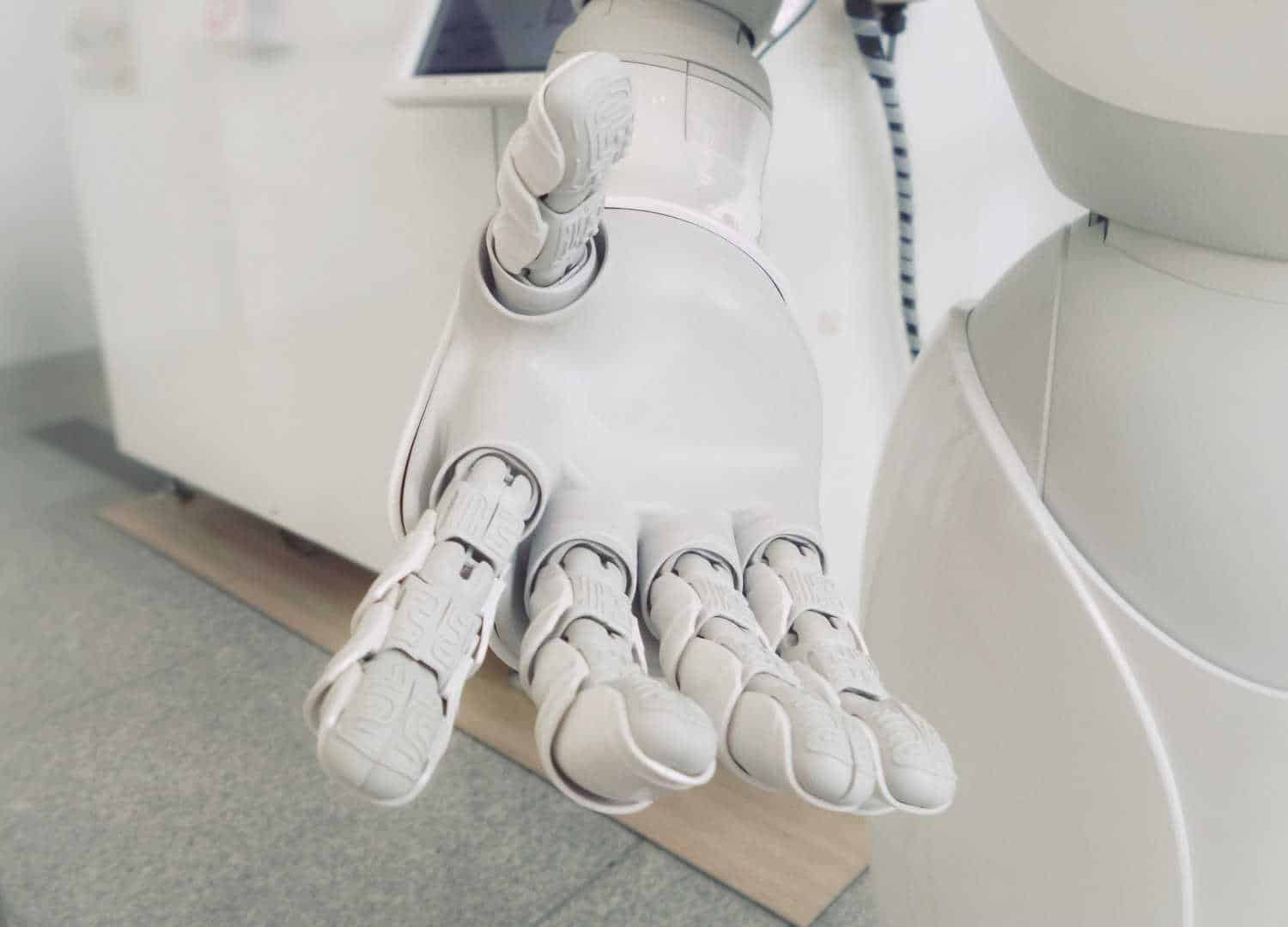

Regulatory guidance often reflects ethical undertones. On the regulatory side, the chart below shows the progress of AI/ML Regulatory guidance.

Source: Marco Daoura on LinkedIn

Various healthcare organizations and regulatory bodies have established standards and guidelines for the ethical use of AI. For instance, the World Health Organization (WHO) emphasizes the need for equitable, transparent AI systems that prioritize patient welfare.

Ethical Guidelines and Regulatory Compliance

These guidelines and frameworks are intended to ensure that machine learning development adheres to ethical standards, and companies are increasingly adopting them to guide their own AI development processes.

The FDA’s approach to regulating AI and machine learning (AI/ML) in medical devices focuses on safety, effectiveness, and ethical considerations. The FDA outlines a premarket review process for AI/ML-based devices that emphasizes transparency, continuous monitoring, and adherence to established guidelines such as its good machine learning practice (GMLP) principles.

This includes frameworks for managing modifications to AI/ML systems, such as predetermined change control plans (PCCPs), so that devices remain safe and effective throughout their lifecycle. These measures align with broader ethical considerations in healthcare AI, such as fairness, accountability, and patient privacy.

For more information, visit the FDA webpage on AI and Machine Learning in Software as a Medical Device.

Patient Privacy and Data Security

Ensuring patient privacy and data security is critical. Robust measures must be in place to obtain and enforce patients' informed consent and to protect sensitive patient information from breaches and unauthorized access.
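
One way to make consent enforceable in software, rather than only on paper, is a default-deny check that runs before any processing step. The sketch below is illustrative only: the `PatientRecord` schema, the purpose strings such as `"model_training"`, and the `records_for_purpose` helper are hypothetical names, not part of any specific product or standard.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PatientRecord:
    # Hypothetical schema: each record carries the purposes the patient consented to
    patient_id: str
    consented_purposes: frozenset = field(default_factory=frozenset)


def records_for_purpose(records, purpose):
    """Default-deny filter: keep only records whose patient consented to `purpose`."""
    return [r for r in records if purpose in r.consented_purposes]


records = [
    PatientRecord("p-001", frozenset({"clinical_care", "model_training"})),
    PatientRecord("p-002", frozenset({"clinical_care"})),
    PatientRecord("p-003"),  # no recorded consent at all
]
allowed = records_for_purpose(records, "model_training")
print([r.patient_id for r in allowed])  # ['p-001']
```

Because a record with no recorded consent is excluded automatically, a missing consent entry can never silently become permission.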

Adhering to regulations such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States and the European Union's General Data Protection Regulation (GDPR) helps maintain high levels of data security and privacy. Best practices include encrypting data in transit and at rest, using secure cloud storage, and conducting regular security audits.
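
Alongside encryption, teams often pseudonymize patient identifiers before data reaches analytics or model-training pipelines. This is a complementary technique, not a substitute for encryption, and pseudonymized data is generally still treated as personal data under GDPR. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library). The `pseudonymize` function and the sample record number are hypothetical, and in practice the key would be held in a key-management service rather than generated inline.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Map an identifier to a stable pseudonym with HMAC-SHA256.

    The same identifier and key always give the same pseudonym, so records
    can still be linked, but the mapping cannot feasibly be recomputed or
    reversed by someone who does not hold the key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Illustration only: a real deployment would load this key from secure storage.
key = secrets.token_bytes(32)
print(pseudonymize("MRN-0012345", key))
```

A keyed hash is used instead of a plain hash because identifiers like record numbers are easy to enumerate, so an unkeyed hash could be reversed by brute force.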

Bias and Discrimination in AI Algorithms

AI algorithms reflect the quality of the data they are trained on. High-quality data is paramount in everything from clinical trials to drug discovery. The challenge is that bias in large data sets can produce biased AI outputs, leading to discrimination in medical decision-making, diagnosis, and treatment.

A March 2024 review by the Alan Turing Institute emphasizes the urgent need to address bias in medical devices, particularly those using AI. The review highlights that biased data can lead to inaccurate diagnoses and treatment recommendations, disproportionately affecting minority groups. It calls for the development of regulatory frameworks, diverse data sets, and continuous monitoring to ensure fairness and equity in AI-driven medical technologies.

For example, if an AI system is trained predominantly on data from a specific demographic, it may perform less accurately for other populations. If providers rely on its outputs for decision support, this can lead to disparities in healthcare delivery.

This can result in certain groups receiving less accurate diagnoses or inappropriate treatment recommendations, ultimately exacerbating health disparities.
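
A simple way to surface this problem is to report a model's performance separately for each demographic group instead of as a single overall number. The sketch below computes per-group accuracy on toy labels. The data and the group names `"A"` and `"B"` are made up for illustration, and real evaluations would typically also compare clinically relevant metrics such as sensitivity.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each demographic group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


# Toy data: the model is right on every group-A case but misses most group-B cases
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

scores = accuracy_by_group(y_true, y_pred, groups)
gap = max(scores.values()) - min(scores.values())
print(scores, gap)  # {'A': 1.0, 'B': 0.25} 0.75
```

Overall accuracy here is 62.5%, a figure that hides the fact that the model performs very differently for the two groups.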

Steps to Reduce Bias in AI Algorithms

Diverse and Representative Data Collection

It is critical that the data used to train AI models, from large general-purpose data sets to specialized clinical ones, include a diverse and representative sample of the population. Companies and researchers are actively working to create and use data sets that better reflect that diversity.
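
A basic representativeness check compares each group's share of the training set with its share of the population the device is meant to serve. The sketch below is a minimal version of that idea. The reference shares and the 5-percentage-point tolerance are hypothetical values chosen for illustration, not recommended thresholds.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return {group: dataset_share - reference_share} for each reference group.

    Negative values mean the group is under-represented in the data set.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {g: counts[g] / n - share for g, share in reference_shares.items()}


training_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}  # hypothetical population shares

gaps = representation_gaps(training_groups, reference)
underrepresented = [g for g, d in gaps.items() if d < -0.05]
print(underrepresented)  # ['B', 'C']
```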

Algorithmic Fairness Techniques

Tools and techniques for detecting and mitigating bias in AI models are becoming more sophisticated. Detection tools help identify where and how bias arises in the data and algorithms, allowing developers to address it early in the development process. Mitigation techniques such as re-sampling and re-weighting correct imbalances in the training data, while adversarial debiasing reduces how much a model's predictions reveal about sensitive attributes during training.
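
As an example of re-weighting, one common scheme (often called "reweighing") gives each training example the weight P(group) × P(label) / P(group, label). In the weighted data, group membership and outcome label become statistically independent. The sketch below computes those weights on toy data. The group and label values are illustrative, and the scheme assumes every group/label combination appears at least once.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-sample weights w = P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


groups = ["A", "A", "A", "A", "B", "B"]
labels = [1, 1, 1, 0, 1, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])  # [0.889, 0.889, 0.889, 1.333, 1.333, 0.667]
```

The resulting weights can then be supplied to any training routine that accepts per-sample weights. For example, many scikit-learn estimators take them through the `sample_weight` argument of `fit`.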

Continuous Monitoring and Evaluation

Implementing continuous monitoring and evaluation processes helps identify and address bias throughout the lifecycle of an AI system.

Feedback loops from end-users, including healthcare providers and patients, are crucial for refining AI systems and highlighting biases that may not have been apparent during the initial development and testing phases.
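
In code, continuous monitoring can be as simple as tracking rolling performance per demographic group as confirmed outcomes arrive (for example, through clinician feedback) and raising an alert when any group falls below a floor. The class below is a hypothetical sketch. The window size, accuracy floor, and minimum sample count are placeholder values that a real deployment would set from its own risk analysis.

```python
from collections import deque


class GroupPerformanceMonitor:
    """Track rolling accuracy per group and flag groups that fall below a floor."""

    def __init__(self, window=100, floor=0.85, min_samples=20):
        self.window = window            # number of recent cases kept per group
        self.floor = floor              # minimum acceptable rolling accuracy
        self.min_samples = min_samples  # avoid alerting on too few cases
        self.history = {}

    def record(self, group, prediction, outcome):
        """Log one prediction once its confirmed outcome is known."""
        buf = self.history.setdefault(group, deque(maxlen=self.window))
        buf.append(prediction == outcome)

    def alerts(self):
        """Return {group: rolling_accuracy} for groups below the floor."""
        flagged = {}
        for group, buf in self.history.items():
            if len(buf) >= self.min_samples:
                accuracy = sum(buf) / len(buf)
                if accuracy < self.floor:
                    flagged[group] = accuracy
        return flagged


monitor = GroupPerformanceMonitor(window=50, floor=0.8, min_samples=10)
for i in range(30):
    monitor.record("A", 1, 1)                     # always correct
    monitor.record("B", 1, 1 if i % 3 else 0)     # wrong on every third case
print(monitor.alerts())
```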

Ethical Challenges with Transparency and Explainability

Transparent, explainable AI models help stakeholders understand how decisions are made. Interpretable modeling and explainable AI (XAI) techniques are being integrated into AI development so that stakeholders, including healthcare providers and patients, can understand the rationale behind AI-driven decisions, which increases trust and enables better oversight.
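
For a linear model such as logistic regression, an explanation can be exact. Each feature's contribution to the log-odds is its coefficient times its value, so contributions can be ranked and shown to a clinician. The sketch below uses made-up coefficients and feature names that are purely illustrative and not clinically validated. For complex models, post-hoc attribution methods such as SHAP aim to produce comparable per-feature explanations, but only approximately.

```python
import math

# Hypothetical coefficients for an illustrative (not clinically validated) risk model
COEFFICIENTS = {"age_decades": 0.4, "systolic_bp_per_10": 0.3, "smoker": 0.9}
INTERCEPT = -8.0


def explain(features):
    """Return the predicted probability and per-feature log-odds contributions,
    ordered from most to least influential."""
    contributions = {name: COEFFICIENTS[name] * value for name, value in features.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1 / (1 + math.exp(-log_odds))
    ranked = dict(sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True))
    return probability, ranked


prob, ranked = explain({"age_decades": 6.5, "systolic_bp_per_10": 14.5, "smoker": 1})
print(f"risk={prob:.2f}")
for name, contribution in ranked.items():
    print(f"{name}: {contribution:+.2f} log-odds")
```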

Lack of transparency can lead to mistrust and reluctance to adopt AI technologies, potentially hindering advancements in patient care.

Solutions to Increase Transparency and Explainability

Use of Simpler Algorithms: While complex models such as deep neural networks are powerful, they often operate as black boxes. Using simpler, interpretable models, such as logistic regression or shallow decision trees, can make it easier to understand how decisions are made.

Visual Explanations: Providing visual representations of how machine learning models make decisions can help users understand complex relationships in the data.

Interactive Dashboards: Implementing interactive dashboards that allow users to explore how artificial intelligence models work can enhance transparency. Users can adjust inputs and see how changes affect outcomes, providing a hands-on understanding of the model’s behavior.

Algorithm Audits: Regularly auditing AI models to assess and document how they make decisions supports transparency. Audit results can be shared with stakeholders to demonstrate the robustness and fairness of the models.

Human-Centered Design: Involving end-users (healthcare providers and patients) in the design and development process can ensure that the AI systems are user-friendly and transparent.

Regulatory Standards and Guidelines: Adhering to the ethical guidelines and standards set by regulatory bodies helps ensure that artificial intelligence systems are transparent and explainable. Organizations such as the FDA, EMA, and WHO provide frameworks that promote transparency in AI development and deployment.
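
The "what-if" interaction described for interactive dashboards comes down to re-scoring a case while varying one input and leaving the others unchanged. The sketch below shows that logic without any user interface. The `risk_score` model, its coefficients, and the feature names are hypothetical stand-ins for whatever model a real dashboard would wrap.

```python
import math


def risk_score(features):
    """Hypothetical logistic risk model, used only to illustrate what-if analysis."""
    log_odds = -4.0 + 0.5 * features["age_decades"] + 0.8 * features["smoker"]
    return 1 / (1 + math.exp(-log_odds))


def what_if(model, baseline, feature, values):
    """Re-score a baseline case for each value of one feature, as a slider would."""
    results = []
    for value in values:
        scenario = {**baseline, feature: value}  # copy, so the baseline is untouched
        results.append((value, model(scenario)))
    return results


baseline = {"age_decades": 5, "smoker": 1}
for value, risk in what_if(risk_score, baseline, "smoker", [0, 1]):
    print(f"smoker={value}: risk={risk:.2f}")
```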

By implementing these solutions, stakeholders are working to enhance the transparency and explainability of AI systems. These efforts are crucial for building trust, ensuring accountability, and enabling the effective use of AI technologies in healthcare, ultimately leading to better patient outcomes and more ethical AI practices.

Human Oversight: The Ultimate Solution to AI Ethical Challenges

Human oversight is essential to navigating AI’s ethical issues. Healthcare professionals must be involved in AI-assisted decision-making processes to ensure that the outcomes are accurate and fair. Establishing clear accountability frameworks can also help maintain trust and responsibility in AI implementations.
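
One hypothetical way to build oversight and accountability into software is to route every AI suggestion to a clinician, prioritize the low-confidence ones, and record who made the final call. The sketch below illustrates that pattern. The confidence threshold, queue names, and clinician identifier are assumptions for illustration, not a prescribed workflow.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    case_id: str
    ai_label: str
    confidence: float
    queue: str
    reviewer: Optional[str] = None     # accountable clinician, set at sign-off
    final_label: Optional[str] = None  # clinician's decision, may differ from the AI

    @property
    def overridden(self) -> bool:
        return self.final_label is not None and self.final_label != self.ai_label


def route(case_id: str, ai_label: str, confidence: float, threshold: float = 0.90) -> Decision:
    """Every AI suggestion goes to a clinician; low-confidence ones are prioritized."""
    queue = "routine_review" if confidence >= threshold else "priority_review"
    return Decision(case_id, ai_label, confidence, queue)


def sign_off(decision: Decision, clinician_id: str, final_label: str) -> Decision:
    """Record the accountable clinician and their final decision."""
    decision.reviewer = clinician_id
    decision.final_label = final_label
    return decision


d = sign_off(route("case-17", "abnormal", 0.62), "dr_lee", "normal")
print(d.queue, d.overridden)  # priority_review True
```

Logging overrides in this way also feeds the monitoring loop described earlier, since frequent overrides for a particular group can signal bias.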

Moving Ahead

The future of ethical AI lies in continuous research and development, policy evolution, and adherence to industry standards. At Galen Data, we keep close tabs on regulatory developments and emerging standards.

We understand the unique challenges of the fast-moving world of medical device data compliance and management. We have the cloud expertise to help you stay up to date. Partner with Galen Data today to:

- Develop a secure and scalable data management plan.

- Leverage our expertise in medical device data and compliance.

- Focus on innovation while we handle the infrastructure.

Book a call with us today to discuss your specific needs and see how Galen Data can help you store, manage, and secure your medical device data at scale.